Implementing MFS (MooseFS) High Availability with Corosync, Pacemaker and DRBD


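I. Introduction to MooseFS

1. Overview

MooseFS is a fault-tolerant distributed network file system. It spreads data across separate disks or partitions on multiple physical servers and keeps several replicas of each piece of data, which makes it a good choice for distributed storage. Its master server, however, runs on a single node, so a plain deployment is not suitable for production use. In this article we use Corosync and Pacemaker plus DRBD to make mfsmaster highly available, so that MooseFS no longer depends on a single master.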

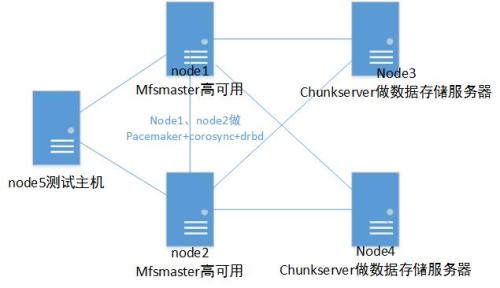

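The topology is as follows:

The key is to make node1 and node2 highly available. Once the master layer is highly available, MooseFS as a whole is highly available.

Now let's start the deployment.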

Environment (all hosts run CentOS 7):

node1: 172.25.0.29, node2: 172.25.0.30 (both act as mfsmaster, configured for high availability)

node3: 172.25.0.33, node4: 172.25.0.34 (both act as mfschunkserver and store the data)

node5: 172.25.0.35 (test client)

MySQL and DRBD are already installed, so here we only re-initialize the resource. Setup reference (Corosync and Pacemaker):

http://xiaozhagn.blog.51cto.com/13264135/1975397

II. Installing the MFS master

1. Download the source tarball on node1:

[root@node1 ~]# yum install zlib-devel -y ## install the dependency

[root@node1 ~]# cd /usr/local/src/

[root@node1 src]# wget https://github.com/moosefs/moosefs/archive/v3.0.96.tar.gz ## download the MooseFS source

After the download, first mount the /dev/drbd1 device we set up earlier on /usr/local/mfs.

2. Mount DRBD

[root@node1 src]# mkdir /usr/local/mfs ## create the mfs directory

[root@node1 src]# cat /proc/drbd ## check the DRBD role; node1 is currently Primary

version: 8.4.10-1 (api:1/proto:86-101)

GIT-hash: a4d5de01fffd7e4cde48a080e2c686f9e8cebf4c build by mockbuild@, 2017-09-15 14:23:22

1: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----

ns:8192 nr:0 dw:0 dr:9120 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0
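The next step reformats the device, so it is worth making sure it only ever runs on the Primary. Below is a minimal sketch that assumes the DRBD 8.4 /proc/drbd format shown above. `drbd_local_role` and `require_primary` are hypothetical helper names and are not part of DRBD.

```shell
#!/usr/bin/env bash
# Print the local role (e.g. "Primary") of DRBD minor $1, read from
# /proc/drbd-style text. The file is $2 (default /proc/drbd) so a saved
# copy can be checked too.
drbd_local_role() {
    local minor="$1" file="${2:-/proc/drbd}"
    # Resource lines look like: " 1: cs:Connected ro:Primary/Secondary ds:..."
    awk -v m="$minor:" '$1 == m {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^ro:/) { split(substr($i, 4), r, "/"); print r[1]; exit }
    }' "$file"
}

# Fail (and say why) unless this node is Primary for minor 1.
require_primary() {
    local role
    role=$(drbd_local_role 1 "${1:-/proc/drbd}")
    if [ "$role" != "Primary" ]; then
        echo "not Primary (role: ${role:-unknown}); refusing to format" >&2
        return 1
    fi
}
```

On node1 it would be used as `require_primary && mkfs.xfs -f /dev/drbd1`.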

[root@node1 src]# mkfs.xfs -f /dev/drbd1 ## reformat the device to avoid leftover problems (this erases everything on it)

meta-data=/dev/drbd1 isize=512 agcount=4, agsize=131066 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=524263, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0


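[root@node1 src]# useradd mfs

[root@node1 src]# mount /dev/drbd1 /usr/local/mfs/ ## mount DRBD on the mfs directory

[root@node1 src]# chown -R mfs:mfs /usr/local/mfs/ ## run after mounting, so the ownership applies to the DRBD file system and not to the directory underneath it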

[root@node1 src]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/cl-root 18G 2.5G 16G 14% /

devtmpfs 226M 0 226M 0% /dev

tmpfs 237M 0 237M 0% /dev/shm

tmpfs 237M 4.6M 232M 2% /run

tmpfs 237M 0 237M 0% /sys/fs/cgroup

/dev/sda1 1014M 197M 818M 20% /boot

tmpfs 48M 0 48M 0% /run/user/0

/dev/drbd1 2.0G 33M 2.0G 2% /usr/local/mfs ## DRBD is mounted successfully
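node2 only sees what is installed on DRBD. That means `make install` must run while /dev/drbd1 is mounted on /usr/local/mfs; otherwise the binaries end up on node1's local disk. Below is a small guard as a sketch. `mounted_on` is a hypothetical helper that reads /proc/mounts (or a saved copy passed as the third argument).

```shell
#!/usr/bin/env bash
# Return 0 if DEVICE ($1) is mounted on DIR ($2) according to a mounts
# table ($3, default /proc/mounts; fields: device mountpoint fstype ...).
mounted_on() {
    local dev="$1" dir="$2" table="${3:-/proc/mounts}"
    # /proc/mounts lists mount points without a trailing slash.
    [ "$dir" = "/" ] || dir="${dir%/}"
    awk -v d="$dev" -v p="$dir" '$1 == d && $2 == p { found = 1 } END { exit !found }' "$table"
}
```

For example: `mounted_on /dev/drbd1 /usr/local/mfs || { echo "drbd1 is not mounted" >&2; exit 1; }` before running `make install`.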

3. Install mfsmaster

[root@node1 src]# tar -xf v3.0.96.tar.gz

[root@node1 src]# cd moosefs-3.0.96/

[root@node1 moosefs-3.0.96]# ./configure --prefix=/usr/local/mfs --with-default-user=mfs --with-default-group=mfs --disable-mfschunkserver --disable-mfsmount

[root@node1 moosefs-3.0.96]# make && make install ## compile and install

[root@node1 moosefs-3.0.96]# cd /usr/local/mfs/etc/mfs/

[root@node1 mfs]# ls

mfsexports.cfg.sample mfsmaster.cfg.sample mfsmetalogger.cfg.sample mfstopology.cfg.sample

[root@node1 mfs]# mv mfsexports.cfg.sample mfsexports.cfg

[root@node1 mfs]# mv mfsmaster.cfg.sample mfsmaster.cfg

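## Take a look at the default parameters:

[root@node1 mfs]# vim mfsmaster.cfg

# WORKING_USER = mfs # user that runs the master server

# WORKING_GROUP = mfs # group that runs the master server

# SYSLOG_IDENT = mfsmaster # identifier of the master server in syslog, marking messages as coming from the master

# LOCK_MEMORY = 0 # whether to call mlockall() so the mfsmaster process is not swapped out (default 0)

# NICE_LEVEL = -19 # process priority (default -19 if possible; the process must be started as root)

# EXPORTS_FILENAME = /usr/local/mfs/etc/mfs/mfsexports.cfg # location of the exports (mount permissions) file

# TOPOLOGY_FILENAME = /usr/local/mfs/etc/mfs/mfstopology.cfg # location of mfstopology.cfg

# DATA_PATH = /usr/local/mfs/var/mfs # data directory; it holds roughly three kinds of files: changelog, sessions and stats

# BACK_LOGS = 50 # number of metadata change log files (default 50)

# BACK_META_KEEP_PREVIOUS = 1 # number of previous metadata files kept (default 1)

# REPLICATIONS_DELAY_INIT = 300 # initial replication delay, in seconds (default 300)

# REPLICATIONS_DELAY_DISCONNECT = 3600 # replication delay after a chunkserver disconnects, in seconds (default 3600)

# MATOML_LISTEN_HOST = * # address to listen on for metalogger connections (default *, any address)

# MATOML_LISTEN_PORT = 9419 # port to listen on for metalogger connections (default 9419)

# MATOML_LOG_PRESERVE_SECONDS = 600

# MATOCS_LISTEN_HOST = * # address to listen on for chunkserver connections (default *, any address)

# MATOCS_LISTEN_PORT = 9420 # port for chunkserver connections (default 9420)

# MATOCL_LISTEN_HOST = * # address to listen on for client (mount) connections (default *, any address)

# MATOCL_LISTEN_PORT = 9421 # port for client (mount) connections (default 9421)

# CHUNKS_LOOP_MAX_CPS = 100000 # maximum number of chunks checked per second in the chunk loop (default 100000)

# CHUNKS_LOOP_MIN_TIME = 300 # minimum time for one full chunk loop, in seconds (default 300)

# CHUNKS_SOFT_DEL_LIMIT = 10 # soft limit of chunk deletions per chunkserver (10)

# CHUNKS_HARD_DEL_LIMIT = 25 # hard limit of chunk deletions per chunkserver (25)

# CHUNKS_WRITE_REP_LIMIT = 2 # maximum number of chunks replicated to one chunkserver in one loop

# CHUNKS_READ_REP_LIMIT = 10 # maximum number of chunks replicated from one chunkserver in one loop

# ACCEPTABLE_DIFFERENCE = 0.1 # maximum allowed difference in space usage between chunkservers

# SESSION_SUSTAIN_TIME = 86400 # client session timeout: 86400 seconds, i.e. one day

# REJECT_OLD_CLIENTS = 0 # reject mounts from clients older than 1.6.0 (0 or 1, default 0)

## These are the official defaults, so the file can be used as-is.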
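Every line in the sample is commented out, so the built-in defaults apply. If you later change something, a helper like the one below lists only the uncommented KEY = VALUE lines, which shows at a glance what differs from the defaults. This is a sketch; `mfs_active_settings` is a hypothetical name and assumes the `KEY = VALUE` layout used by the MooseFS .cfg files.

```shell
#!/usr/bin/env bash
# Print the active (uncommented) settings of an MFS .cfg file as KEY=VALUE.
mfs_active_settings() {
    awk '
        /^[[:space:]]*#/ || /^[[:space:]]*$/ { next }   # skip comments and blank lines
        {
            i = index($0, "=")
            if (i == 0) next                             # not a KEY = VALUE line
            key = substr($0, 1, i - 1); val = substr($0, i + 1)
            sub(/^[[:space:]]+/, "", key); sub(/[[:space:]]+$/, "", key)
            sub(/^[[:space:]]+/, "", val); sub(/[[:space:]]+$/, "", val)
            print key "=" val
        }' "$1"
}
```

Usage: `mfs_active_settings /usr/local/mfs/etc/mfs/mfsmaster.cfg` prints nothing for the untouched sample.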

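Next, edit the exports (access control) file:

[root@node1 mfs]# vim mfsexports.cfg

* / rw,alldirs,mapall=mfs:mfs,password=xiaozhang

* . rw

## Each line of mfsexports.cfg is one rule with three parts. The first is the IP address or address range of the MFS clients, the second is the exported directory ("." stands for the MFSMETA meta file system), and the third sets the access rights those clients get.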
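To make the three-part rule format concrete, here is a sketch that splits one rule into its fields. `parse_export_rule` is a hypothetical helper; it assumes whitespace-separated fields and comments that start at the beginning of the line.

```shell
#!/usr/bin/env bash
# Split one mfsexports.cfg rule into client range, exported path and options.
# Blank lines and "#" comment lines produce no output.
parse_export_rule() {
    local line="$1" client path opts
    case "$line" in ''|'#'*) return 0 ;; esac
    # The last variable (opts) receives the rest of the line.
    read -r client path opts <<EOF
$line
EOF
    printf 'client=%s path=%s options=%s\n' "$client" "$path" "$opts"
}
```

For the first rule above it prints `client=* path=/ options=rw,alldirs,mapall=mfs:mfs,password=xiaozhang`.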

Next, activate the metadata file. It ships only as an empty template (metadata.mfs.empty), so copy it into place manually:

[root@node1 mfs]# cp /usr/local/mfs/var/mfs/metadata.mfs.empty /usr/local/mfs/var/mfs/metadata.mfs
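Copying the empty template is only correct on the very first setup. If the same step were re-run against a live namespace, it would replace the real metadata with an empty one. Below is a guarded version as a sketch. `init_mfs_metadata` is a hypothetical helper, and the extra check for metadata.mfs.back is a precaution, assuming a master that has run but not stopped cleanly may leave its metadata under that name.

```shell
#!/usr/bin/env bash
# Copy metadata.mfs.empty to metadata.mfs in DATA_PATH ($1), but only if
# no metadata file exists yet, so a real namespace is never overwritten.
init_mfs_metadata() {
    local dir="${1:-/usr/local/mfs/var/mfs}"
    if [ -e "$dir/metadata.mfs" ] || [ -e "$dir/metadata.mfs.back" ]; then
        echo "metadata already present in $dir; leaving it alone" >&2
        return 0
    fi
    cp -p "$dir/metadata.mfs.empty" "$dir/metadata.mfs"
}
```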

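4. Test startup:

[root@node1 mfs]# /usr/local/mfs/sbin/mfsmaster start ## if it starts without errors, create a startup unit

5. Startup unit:

[root@node1 mfs]# cat /etc/systemd/system/mfsmaster.service

[Unit]

Description=mfs

After=network.target

[Service]

Type=forking

ExecStart=/usr/local/mfs/sbin/mfsmaster start

ExecStop=/usr/local/mfs/sbin/mfsmaster stop

PrivateTmp=true

[Install]

WantedBy=multi-user.target

[root@node1 mfs]# chmod 644 /etc/systemd/system/mfsmaster.service ## unit files need no execute bit; systemd warns when it is set

[root@node1 mfs]# systemctl enable mfsmaster ## enable at boot (once Pacemaker manages the service, leaving it disabled, as on node2, is safer)

[root@node1 mfs]# /usr/local/mfs/sbin/mfsmaster stop ## stop the instance started by hand in step 4; systemctl stop would not affect it

[root@node1 ~]# umount -l /usr/local/mfs/ ## then unmount /dev/drbd1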
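node1 and node2 need an identical unit file, so it can help to generate it from a single place. Below is a sketch. `write_mfsmaster_unit` is a hypothetical helper whose unit body is the one shown above. The target path is a parameter so it can be tried on a scratch file; on a real node, run `systemctl daemon-reload` afterwards.

```shell
#!/usr/bin/env bash
# Write the mfsmaster unit to $1 (default: the real systemd location).
write_mfsmaster_unit() {
    local target="${1:-/etc/systemd/system/mfsmaster.service}"
    cat > "$target" <<'EOF'
[Unit]
Description=mfs
After=network.target

[Service]
Type=forking
ExecStart=/usr/local/mfs/sbin/mfsmaster start
ExecStop=/usr/local/mfs/sbin/mfsmaster stop
PrivateTmp=true

[Install]
WantedBy=multi-user.target
EOF
    chmod 644 "$target"   # readable by all, no execute bit
}
```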

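6. Next, on node2:

[root@node2 ~]# mkdir -p /usr/local/mfs

[root@node2 ~]# useradd mfs ## the mfs UID/GID must match node1's (compare with id mfs), because files on DRBD are owned by the numeric ID

[root@node2 ~]# chown -R mfs:mfs /usr/local/mfs/

Check the DRBD status: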

[root@node2 ~]# cat /proc/drbd

version: 8.4.10-1 (api:1/proto:86-101)

GIT-hash: a4d5de01fffd7e4cde48a080e2c686f9e8cebf4c build by mockbuild@, 2017-09-15 14:23:22

1: cs:Connected ro:Secondary/Primary ds:UpToDate/UpToDate C r-----

ns:0 nr:25398 dw:25398 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

## node2 is Secondary and DRBD is healthy

## Create the mfsmaster startup unit

[root@node2 ~]# cat /etc/systemd/system/mfsmaster.service

[Unit]

Description=mfs

After=network.target

[Service]

Type=forking

ExecStart=/usr/local/mfs/sbin/mfsmaster start

ExecStop=/usr/local/mfs/sbin/mfsmaster stop

PrivateTmp=true

[Install]

WantedBy=multi-user.target

[root@node2 ~]# chmod 644 /etc/systemd/system/mfsmaster.service ## unit files need no execute bit

7. Configure mfsmaster high availability (on node1)

[root@node1 ~]# crm

crm(live)# status ## check the status

Stack: corosync

Current DC: node1 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum

Last updated: Sat Oct 28 12:36:03 2017

Last change: Thu Oct 26 11:15:07 2017 by root via cibadmin on node1

2 nodes configured

0 resources configured

Online: [ node1 node2 ]

No resources ## Pacemaker and Corosync are running normally

8. Configure resources

crm(live)# configure

crm(live)configure# primitive mfs_drbd ocf:linbit:drbd params drbd_resource=mysql op monitor role=Master interval=10 timeout=20 op monitor role=Slave interval=20 timeout=20 op start timeout=240 op stop timeout=100 ## define the DRBD resource (drbd_resource is the name of the existing DRBD resource, here mysql)

crm(live)configure# verify

crm(live)configure# ms ms_mfs_drbd mfs_drbd meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true" ## master/slave set: one Master across the two nodes

crm(live)configure# verify

crm(live)configure# commit

9. Configure the file system (mount) resource

crm(live)configure# primitive mystore ocf:heartbeat:Filesystem params device=/dev/drbd1 directory=/usr/local/mfs fstype=xfs op start timeout=60 op stop timeout=60

crm(live)configure# verify

crm(live)configure# colocation ms_mfs_drbd_with_mystore inf: mystore ms_mfs_drbd ## keep the mount with DRBD; binding it to the Master role (ms_mfs_drbd:Master) is the more precise form

crm(live)configure# order ms_mfs_drbd_before_mystore Mandatory: ms_mfs_drbd:promote mystore:start ## promote DRBD before mounting

10. Configure the mfs resource:

crm(live)configure# primitive mfs systemd:mfsmaster op monitor timeout=100 interval=30 op start timeout=30 interval=0 op stop timeout=30 interval=0

crm(live)configure# colocation mfs_with_mystore inf: mfs mystore

crm(live)configure# order mystor_befor_mfs Mandatory: mystore mfs

crm(live)configure# verify

WARNING: mfs: specified timeout 30 for start is smaller than the advised 100

WARNING: mfs: specified timeout 30 for stop is smaller than the advised 100

crm(live)configure# commit ## these are only warnings; setting the start/stop timeout to 100 follows the advice

11. Configure the VIP:

crm(live)configure# primitive vip ocf:heartbeat:IPaddr params ip=172.25.0.100

crm(live)configure# colocation vip_with_msf inf: vip mfs

crm(live)configure# verify

crm(live)configure# commit

12. Review the configuration with show

crm(live)configure# show

node 1: node1 attributes standby=off

node 2: node2

primitive mfs systemd:mfsmaster op monitor timeout=100 interval=30 op start timeout=30 interval=0 op stop timeout=30 interval=0

primitive mfs_drbd ocf:linbit:drbd params drbd_resource=mysql op monitor role=Master interval=10 timeout=20 op monitor role=Slave interval=20 timeout=20 op start timeout=240 interval=0 op stop timeout=100 interval=0

primitive mystore Filesystem params device="/dev/drbd1" directory="/usr/local/mfs" fstype=xfs op start timeout=60 interval=0 op stop timeout=60 interval=0

primitive vip IPaddr params ip=172.25.0.100

ms ms_mfs_drbd mfs_drbd meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true

colocation mfs_with_mystore inf: mfs mystore

order ms_mfs_drbd_before_mystore Mandatory: ms_mfs_drbd:promote mystore:start

colocation ms_mfs_drbd_with_mystore inf: mystore ms_mfs_drbd

order mystor_befor_mfs Mandatory: mystore mfs

colocation vip_with_msf inf: vip mfs

property cib-bootstrap-options: have-watchdog=false dc-version=1.1.16-12.el7_4.4-94ff4df cluster-infrastructure=corosync cluster-name=mycluster stonith-enabled=false migration-limit=1
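The configuration from steps 8 to 11 can also be kept in a file and loaded with crmsh (`crm configure load update <file>`). A file is easier to review and repeat than interactive typing. The sketch below prints the configuration in crm syntax; `mfs_crm_config` is a hypothetical helper. It deliberately differs from the transcript in two places: the Filesystem colocation is bound to the DRBD Master role, and the mfs start/stop timeouts use the 100 seconds advised by the verify warnings. Treat it as a starting point and check it with `crm configure verify` before committing.

```shell
#!/usr/bin/env bash
# Print the MFS HA resources and constraints in crm shell syntax.
mfs_crm_config() {
    cat <<'EOF'
primitive mfs_drbd ocf:linbit:drbd \
    params drbd_resource=mysql \
    op monitor role=Master interval=10 timeout=20 \
    op monitor role=Slave interval=20 timeout=20 \
    op start timeout=240 op stop timeout=100
ms ms_mfs_drbd mfs_drbd \
    meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
primitive mystore ocf:heartbeat:Filesystem \
    params device=/dev/drbd1 directory=/usr/local/mfs fstype=xfs \
    op start timeout=60 op stop timeout=60
primitive mfs systemd:mfsmaster \
    op monitor timeout=100 interval=30 \
    op start timeout=100 interval=0 op stop timeout=100 interval=0
primitive vip ocf:heartbeat:IPaddr params ip=172.25.0.100
colocation ms_mfs_drbd_with_mystore inf: mystore ms_mfs_drbd:Master
order ms_mfs_drbd_before_mystore Mandatory: ms_mfs_drbd:promote mystore:start
colocation mfs_with_mystore inf: mfs mystore
order mystor_befor_mfs Mandatory: mystore mfs
colocation vip_with_msf inf: vip mfs
EOF
}
```

For example: `mfs_crm_config > /root/mfs.crm && crm configure load update /root/mfs.crm`, then compare the result with `crm configure show`.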

## Check the status of each service

crm(live)# status

Stack: corosync

Current DC: node1 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum

Last updated: Sat Oct 28 15:24:26 2017

Last change: Sat Oct 28 15:24:20 2017 by root via cibadmin on node1

2 nodes configured

5 resources configured

Online: [ node1 node2 ]

Full list of resources:

Master/Slave Set: ms_mfs_drbd [mfs_drbd]

Masters: [ node1 ]

Slaves: [ node2 ]

mystore(ocf::heartbeat:Filesystem):Started node1

mfs(systemd:mfsmaster):Started node1

vip(ocf::heartbeat:IPaddr):Started node1 ## all services are running on node1
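To check this from a script rather than by eye, here is a sketch that reads `crm status` (or `crm_mon -1`) output and fails unless every started primitive runs on the same node. `same_node_check` is a hypothetical helper. It only looks at "Started <node>" lines, so the Master/Slave set is not included.

```shell
#!/usr/bin/env bash
# Read crm status output on stdin; print the node if all "Started" primitives
# share one node, otherwise (or if none are started) return 1.
same_node_check() {
    awk '
        /\):[[:space:]]*Started/ {
            if (match($0, /Started[[:space:]]+[^[:space:]#]+/)) {
                s = substr($0, RSTART, RLENGTH)
                sub(/^Started[[:space:]]+/, "", s)   # keep only the node name
                nodes[s] = 1
                count++
            }
        }
        END {
            k = 0
            for (n in nodes) { k++; last = n }
            if (count == 0 || k != 1) exit 1
            print last
        }'
}
```

Usage: `crm_mon -1 | same_node_check`.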

III. Installing and configuring the chunkservers

1. Perform the same steps on node3 and node4 (only node3 is shown below):

[root@node3 ~]# yum install zlib-devel -y ## install the dependency

[root@node3 ~]# cd /usr/local/src/

[root@node3 src]# wget https://github.com/moosefs/moosefs/archive/v3.0.96.tar.gz ## download the MooseFS source

[root@node3 src]# useradd mfs

[root@node3 src]# tar zxvf v3.0.96.tar.gz

[root@node3 src]# cd moosefs-3.0.96/

[root@node3 moosefs-3.0.96]# ./configure --prefix=/usr/local/mfs --with-default-user=mfs --with-default-group=mfs --disable-mfsmaster --disable-mfsmount

[root@node3 moosefs-3.0.96]# make && make install

[root@node3 moosefs-3.0.96]# cd /usr/local/mfs/etc/mfs/

[root@node3 mfs]# cp mfschunkserver.cfg.sample mfschunkserver.cfg

[root@node3 mfs]# vim mfschunkserver.cfg

MASTER_HOST = 172.25.0.100 ## point the chunkserver at the mfsmaster virtual IP
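The same edit has to be repeated on node3 and node4, so a non-interactive version of the vim step can be handy. Below is a sketch using GNU sed. `set_master_host` is a hypothetical helper; it assumes the sample file contains a (possibly commented) `MASTER_HOST =` line.

```shell
#!/usr/bin/env bash
# Set MASTER_HOST in a chunkserver config ($1) to $2. An existing MASTER_HOST
# line, commented or not, is replaced; otherwise a new line is appended.
set_master_host() {
    local cfg="$1" host="$2"
    if grep -Eq '^[[:space:]]*#?[[:space:]]*MASTER_HOST[[:space:]]*=' "$cfg"; then
        sed -i -E "s|^[[:space:]]*#?[[:space:]]*MASTER_HOST[[:space:]]*=.*|MASTER_HOST = $host|" "$cfg"
    else
        printf 'MASTER_HOST = %s\n' "$host" >> "$cfg"
    fi
}
```

Usage: `set_master_host /usr/local/mfs/etc/mfs/mfschunkserver.cfg 172.25.0.100`.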

2. Configure mfshdd.cfg

mfshdd.cfg lists the chunkserver directories that are handed over to the master server for storage. Although you only enter a directory here, it is best backed by a dedicated partition.

[root@node3 mfs]# cp /usr/local/mfs/etc/mfs/mfshdd.cfg.sample /usr/local/mfs/etc/mfs/mfshdd.cfg

[root@node3 mfs]# vim /usr/local/mfs/etc/mfs/mfshdd.cfg

/mfsdata ## add the directory that will store the data
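Likewise, the mfshdd.cfg edit can be made idempotent, so that re-running the setup does not list a directory twice. Below is a sketch; `add_hdd_dir` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Append directory $2 to mfshdd.cfg ($1) unless that exact line is already there.
add_hdd_dir() {
    local cfg="$1" dir="$2"
    grep -qxF "$dir" "$cfg" 2>/dev/null || printf '%s\n' "$dir" >> "$cfg"
}
```

Usage: `add_hdd_dir /usr/local/mfs/etc/mfs/mfshdd.cfg /mfsdata`.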

3. Start the chunkserver:

[root@node3 mfs]# mkdir /mfsdata

[root@node3 mfs]# chown mfs:mfs /mfsdata/

[root@node3 mfs]# /usr/local/mfs/sbin/mfschunkserver start

IV. Installing the client (mount) software:

On node5:

1. Install FUSE:

[root@node5 ~]# yum install fuse fuse-devel -y

[root@node5 ~]# modprobe fuse

[root@node5 ~]# lsmod | grep fuse

fuse 91874 0

2. Install the mount client

[root@node5 ~]# yum install zlib-devel -y

[root@node5 ~]# useradd mfs

[root@node5 ~]# cd /usr/local/src/

[root@node5 src]# wget https://github.com/moosefs/moosefs/archive/v3.0.96.tar.gz ## download the source, as on the other nodes

[root@node5 src]# tar -zxvf v3.0.96.tar.gz

[root@node5 src]# cd moosefs-3.0.96/

[root@node5 moosefs-3.0.96]# ./configure --prefix=/usr/local/mfs --with-default-user=mfs --with-default-group=mfs --disable-mfsmaster --disable-mfschunkserver --enable-mfsmount

[root@node5 moosefs-3.0.96]# make && make install

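3. To mount the file system on the client, first create the mount point:

[root@node5 moosefs-3.0.96]# mkdir /mfsdata

[root@node5 moosefs-3.0.96]# chown -R mfs:mfs /mfsdata/

V. Testing

1. Test mounting through the VIP

On node1, check whether the VIP exists: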

[root@node1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:49:e9:da brd ff:ff:ff:ff:ff:ff

inet 172.25.0.29/24 brd 172.25.0.255 scope global ens33

valid_lft forever preferred_lft forever

inet 172.25.0.100/24 brd 172.25.0.255 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe49:e9da/64 scope link

## the VIP is up on node1
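The same check can be scripted, which is useful when repeating it on node2 after failover. Below is a sketch; `has_ipv4` is a hypothetical helper. It looks for an exact IPv4 match in `ip` output, so 172.25.0.10 is not mistaken for 172.25.0.100. A saved copy of `ip addr` output can be passed as the second argument.

```shell
#!/usr/bin/env bash
# Return 0 if IPv4 address $1 is configured here (or in saved `ip` output $2).
has_ipv4() {
    local ip="$1" src="${2:-}"
    if [ -n "$src" ]; then cat "$src"; else ip -o -4 addr show; fi |
        awk -v want="$ip" '
            { for (i = 1; i < NF; i++)
                  if ($i == "inet") { split($(i + 1), a, "/"); if (a[1] == want) found = 1 } }
            END { exit !found }'
}
```

Usage: `has_ipv4 172.25.0.100 && echo "VIP is on $(hostname)"`.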

On node5, test mounting and writing:

[root@node5 ~]# /usr/local/mfs/bin/mfsmount /mfsdata -H 172.25.0.100 -p

MFS Password: ## enter the password set in mfsexports.cfg on the mfsmaster

mfsmaster accepted connection with parameters: read-write,restricted_ip,map_all ; root mapped to 1004:1004 ; users mapped to 1004:1004

[root@node5 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/cl-root 18G 1.9G 17G 11% /

devtmpfs 226M 0 226M 0% /dev

tmpfs 237M 0 237M 0% /dev/shm

tmpfs 237M 4.6M 232M 2% /run

tmpfs 237M 0 237M 0% /sys/fs/cgroup

/dev/sda1 1014M 139M 876M 14% /boot

tmpfs 48M 0 48M 0% /run/user/0

172.25.0.100:9421 36G 4.1G 32G 12% /mfsdata

## MooseFS is now mounted on /mfsdata through the virtual IP:

[root@node5 ~]# cd /mfsdata/

[root@node5 mfsdata]# touch xiaozhang.txt

[root@node5 mfsdata]# echo xiaozhang > xiaozhang.txt

[root@node5 mfsdata]# cat xiaozhang.txt

xiaozhang

## the write succeeded, so the goal is met
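The touch/echo/cat check can be wrapped in a function that writes a unique marker, reads it back and cleans up. Running it before and after the failover gives a quick pass/fail. Below is a sketch; `rw_check` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Write a unique marker file into directory $1, read it back, compare, and
# remove it. Returns 0 only if the content round-trips unchanged.
rw_check() {
    local dir="$1" f marker
    marker="mfs-check-${HOSTNAME:-host}-$$-$(date +%s)"
    f="$dir/.rw_check.$$"
    printf '%s\n' "$marker" > "$f" || return 1
    sync
    if [ "$(cat "$f")" = "$marker" ]; then
        rm -f "$f"
        return 0
    fi
    rm -f "$f"
    return 1
}
```

Usage: `rw_check /mfsdata && echo "read/write OK"`.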

2. High-availability test

First unmount the file system on node5:

[root@node5 ~]# umount -l /mfsdata/

[root@node5 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/cl-root 18G 1.9G 17G 11% /

devtmpfs 226M 0 226M 0% /dev

tmpfs 237M 0 237M 0% /dev/shm

tmpfs 237M 4.6M 232M 2% /run

tmpfs 237M 0 237M 0% /sys/fs/cgroup

/dev/sda1 1014M 139M 876M 14% /boot

tmpfs 48M 0 48M 0% /run/user/0

Then go to node1 and put it into standby:

[root@node1 ~]# crm

crm(live)# node standby


crm(live)# status

Stack: corosync

Current DC: node1 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum

Last updated: Sat Oct 28 16:10:18 2017

Last change: Sat Oct 28 16:10:04 2017 by root via crm_attribute on node1

2 nodes configured

5 resources configured

Node node1: standby

Online: [ node2 ]

Full list of resources:

Master/Slave Set: ms_mfs_drbd [mfs_drbd]

Masters: [ node2 ]

Stopped: [ node1 ]

mystore(ocf::heartbeat:Filesystem):Started node2

mfs(systemd:mfsmaster):Started node2

vip(ocf::heartbeat:IPaddr):Started node2 ## all services have failed over to node2
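Failover takes a few seconds. Instead of re-running status by hand, you can poll until the expected state appears. Below is a generic sketch; `wait_until` is a hypothetical helper and its timeout is in seconds.

```shell
#!/usr/bin/env bash
# Run the given command repeatedly (once per second) until it succeeds.
# Return 1 if it has not succeeded within $1 seconds.
wait_until() {
    local timeout="$1" start
    shift
    start=$(date +%s)
    until "$@"; do
        if [ $(( $(date +%s) - start )) -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
    done
}
```

For example: `wait_until 120 sh -c 'crm_mon -1 | grep -q "vip.*Started node2"'`.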

Check the resources on node2:

VIP:

[root@node2 system]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:64:00:b1 brd ff:ff:ff:ff:ff:ff

inet 172.25.0.30/24 brd 172.25.0.255 scope global ens33

valid_lft forever preferred_lft forever

inet 172.25.0.100/24 brd 172.25.0.255 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe64:b1/64 scope link

valid_lft forever preferred_lft forever

Disk mounts:

[root@node2 system]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/cl-root 18G 2.5G 16G 14% /

devtmpfs 226M 0 226M 0% /dev

tmpfs 237M 39M 198M 17% /dev/shm

tmpfs 237M 8.6M 228M 4% /run

tmpfs 237M 0 237M 0% /sys/fs/cgroup

/dev/sda1 1014M 197M 818M 20% /boot

tmpfs 48M 0 48M 0% /run/user/0

/dev/drbd1 2.0G 40M 2.0G 2% /usr/local/mfs ## DRBD is now mounted on node2

mfsmaster service status:

[root@node2 system]# systemctl status mfsmaster

mfsmaster.service - Cluster Controlled mfsmaster

Loaded: loaded (/etc/systemd/system/mfsmaster.service; disabled; vendor preset: disabled)

Drop-In: /run/systemd/system/mfsmaster.service.d

└─50-pacemaker.conf

Active: active (running) since Sat 2017-10-28 16:10:17 CST; 6min ago

Process: 12283 ExecStart=/usr/local/mfs/sbin/mfsmaster start (code=exited, status=0/SUCCESS)

Main PID: 12285 (mfsmaster)

CGroup: /system.slice/mfsmaster.service

└─12285 /usr/local/mfs/sbin/mfsmaster start ## the service is running

Now mount it again from node5:

[root@node5 ~]# /usr/local/mfs/bin/mfsmount /mfsdata -H 172.25.0.100 -p

MFS Password:

mfsmaster accepted connection with parameters: read-write,restricted_ip,map_all ; root mapped to 1004:1004 ; users mapped to 1004:1004

[root@node5 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/cl-root 18G 1.9G 17G 11% /

devtmpfs 226M 0 226M 0% /dev

tmpfs 237M 0 237M 0% /dev/shm

tmpfs 237M 4.6M 232M 2% /run

tmpfs 237M 0 237M 0% /sys/fs/cgroup

/dev/sda1 1014M 139M 876M 14% /boot

tmpfs 48M 0 48M 0% /run/user/0

172.25.0.100:9421 36G 4.2G 32G 12% /mfsdata

[root@node5 ~]# cat /mfsdata/xiaozhang.txt

xiaozhang

The file written before the failover can still be read through the VIP after node1 went into standby. This shows that MFS high availability based on Corosync, Pacemaker and DRBD works.
